Addressing Today’s Challenges

Clinical summary reports play a critical role in drug development, providing a comprehensive overview of the drug's clinical trial data. Accurate data and summaries are necessary to obtain regulatory approval for marketing the drug to patients in need.

To validate the accuracy of clinical trial results, an independent programmer writes a separate quality check (QC) program, a technique known as double programming. The outputs of the main program and the QC program must match to confirm accuracy and rule out human error.
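The comparison step of double programming can be sketched as follows. This is a minimal illustration, not a description of any particular SCE or tool: the function name `compare_outputs`, the row layout, and the sample values are all hypothetical.

```python
from math import isclose

def compare_outputs(main_rows, qc_rows, key, rel_tol=1e-9):
    """Return (key, column, main_value, qc_value) tuples where the outputs differ."""
    main_by_key = {row[key]: row for row in main_rows}
    qc_by_key = {row[key]: row for row in qc_rows}
    mismatches = []
    for k in sorted(main_by_key.keys() | qc_by_key.keys()):
        main_row = main_by_key.get(k)
        qc_row = qc_by_key.get(k)
        # A record present in only one output is reported as a whole-row mismatch.
        if main_row is None or qc_row is None:
            mismatches.append((k, None, main_row, qc_row))
            continue
        for col in sorted(main_row.keys() | qc_row.keys()):
            a, b = main_row.get(col), qc_row.get(col)
            both_numeric = isinstance(a, (int, float)) and isinstance(b, (int, float))
            # Numbers are compared with a tolerance, everything else exactly.
            equal = isclose(a, b, rel_tol=rel_tol) if both_numeric else a == b
            if not equal:
                mismatches.append((k, col, a, b))
    return mismatches

# Hypothetical summary rows from the main program and the QC program.
main_output = [
    {"param": "AGE", "n": 120, "mean": 54.3},
    {"param": "WEIGHT", "n": 120, "mean": 71.8},
]
qc_output = [
    {"param": "AGE", "n": 120, "mean": 54.3},
    {"param": "WEIGHT", "n": 119, "mean": 71.8},
]

diffs = compare_outputs(main_output, qc_output, key="param")
print(diffs)
```

An empty result only shows that the two programs agree with each other. If both were written from the same flawed specification, they can agree and still be wrong.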

Despite claims of unbiased QC and matching values, the accuracy of clinical analysis results is not always guaranteed. Additionally, double programming becomes challenging when large volumes of data must be handled. It is imperative to have a senior statistical resource who can question the specifications at every stage.

Statistical Computing Environments (SCEs) have long facilitated double programming techniques, but their reliance on traditional approaches limits data accuracy. The need for a paradigm shift is evident: a call for innovation that transcends conventional boundaries.

Foreseeing Tomorrow’s Necessity

The double programming technique is considered the gold standard for ensuring the accuracy of clinical trial analysis results. However, the challenges highlighted above show that the results will not always be 100% accurate, despite the effort involved.

To make the best use of the available resources despite these constraints, an alternative validation model is needed. Such a model allows data quality checks to be conducted more efficiently and consistently, resulting in accurate clinical trial analysis reports.

To ignite a transformative shift in improving the efficiency of data validation, Care2Data proudly presents an avant-garde, high-octane intelligent data validation platform. With its unmatched performance, the platform redefines the standards of quality, consistency, and accuracy in data validation.

Innovating for Impact

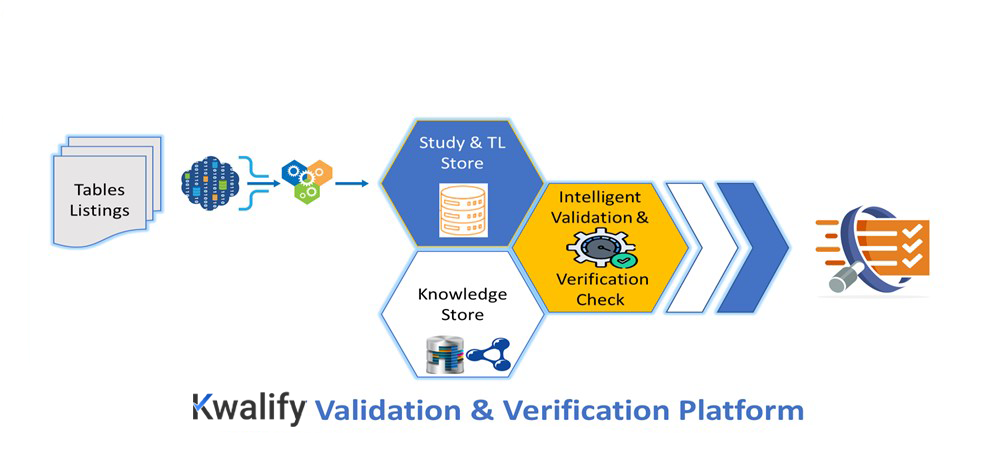

The platform is a data validation and verification tool designed to identify potential discrepancies in data to ensure accuracy in clinical trial analysis results. It offers intelligent data mapping using data triplets and can handle validation throughout the data flow in a trial.

With all these elements joining forces, the platform is a catalyst for data validation. It delivers Data Quality as a Service using innovative techniques with accuracy and impact.

Machines usually have difficulty understanding the inter-relationships within the data that are obvious to humans. This is where the platform’s semantic intelligence helps: it detects relationships between data through multiple types of validation techniques (horizontal, vertical, cross-table, reference, etc.). Together, these techniques allow precise data checks across every possible combination to ensure data quality.
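The four validation types named above are not defined further on this page, so the sketch below rests on assumed interpretations: horizontal as consistency within one record, vertical as a rule down one column, cross-table as a key that must exist in another table, and reference as membership in a controlled list. All function names, column names, and sample records are hypothetical and do not reflect the platform's actual implementation.

```python
def horizontal_check(rows):
    """Within one record: the start date must not be after the end date.
    ISO-8601 date strings compare correctly as plain strings."""
    return [r["subject"] for r in rows if r["start"] > r["end"]]

def vertical_check(rows, column):
    """Down one column: report values that appear more than once."""
    seen, duplicates = set(), []
    for r in rows:
        value = r[column]
        if value in seen:
            duplicates.append(value)
        seen.add(value)
    return duplicates

def cross_table_check(child_rows, parent_rows, key):
    """Across tables: every child key must exist in the parent table."""
    parent_keys = {r[key] for r in parent_rows}
    return [r[key] for r in child_rows if r[key] not in parent_keys]

def reference_check(rows, column, allowed):
    """Against a reference list: report values outside the allowed set."""
    return [r[column] for r in rows if r[column] not in allowed]

# Hypothetical demographics and adverse event records.
demographics = [
    {"subject": "S01", "sex": "F"},
    {"subject": "S02", "sex": "M"},
    {"subject": "S02", "sex": "X"},
]
adverse_events = [
    {"subject": "S01", "start": "2024-01-10", "end": "2024-01-12"},
    {"subject": "S03", "start": "2024-02-05", "end": "2024-02-01"},
]

findings = {
    "horizontal": horizontal_check(adverse_events),
    "vertical": vertical_check(demographics, "subject"),
    "cross_table": cross_table_check(adverse_events, demographics, "subject"),
    "reference": reference_check(demographics, "sex", {"F", "M"}),
}
print(findings)
```

Keeping each check as a separate function makes it possible to run them in any combination over the same tables.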

Metadata from a study help determine recurring vocabularies and hierarchies. The knowledge graph used in the platform handles this interconnected information. A smart semantic framework stores this knowledge graph in a FAIR-compliant way. This helps the platform continuously learn and become smarter over time.
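As a rough illustration of how study metadata can be held as a graph, the sketch below assumes that the "data triplets" mentioned earlier are subject-predicate-object triples, as in RDF-style knowledge graphs. That reading is an assumption, and the predicate names, variable names, and terms are hypothetical examples rather than the platform's actual schema.

```python
def match(triples, subject=None, predicate=None, obj=None):
    """Return the triples that fit the pattern; None acts as a wildcard."""
    return [
        t for t in triples
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    ]

# Hypothetical metadata: a variable, the dataset it belongs to, and its codelist.
triples = [
    ("AESEV", "is_a", "Variable"),
    ("AESEV", "belongs_to", "AE"),
    ("AESEV", "uses_codelist", "Severity"),
    ("Severity", "has_term", "MILD"),
    ("Severity", "has_term", "MODERATE"),
    ("Severity", "has_term", "SEVERE"),
]

def allowed_terms(triples, variable):
    """Follow variable -> codelist -> term to collect the permitted values."""
    codelists = [o for _, _, o in match(triples, subject=variable, predicate="uses_codelist")]
    return {
        term
        for codelist in codelists
        for _, _, term in match(triples, subject=codelist, predicate="has_term")
    }

terms = allowed_terms(triples, "AESEV")
print(sorted(terms))
```

Because vocabularies and hierarchies are stored as data rather than hard-coded, a reference check can look up its permitted values from the graph, and adding triples extends what can be validated without changing the code.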

Demo: Witness the Future in Action

In our live demo, experience the transformative impact of the platform in action as it intelligently validates the data provided through multiple validation techniques.

Feel free to explore the platform and provide your feedback. Your insights are valuable in helping us enhance it and deliver the best possible experience.

Regulatory Compliance

The platform is architecturally designed to meet 21 CFR Part 11 requirements. The evidence necessary to demonstrate regulatory compliance is available as a report, which can be verified and filed at the time of installation and commissioning of the system.

Frequently Asked Questions (FAQs)

- How does the platform automate the validation process?

  The platform uses advanced intelligent techniques to automate data validation using semantics, reducing the reliance on manual intervention and minimizing the risk of human error.

- Can the platform adapt to global regulatory frameworks?

  Absolutely. The platform’s validation techniques universally align across all clinical trial reports submitted to global regulatory bodies.

- Is the platform user-friendly and easy to integrate into existing systems?

  Yes, the architectural design of the platform facilitates its integration with the existing SCE using APIs. It can also perform validation and verification activities independently.

- Who should use the system?

  The primary users are senior biostatisticians, senior clinical programmers, and clinical data validation staff within the biometrics or biostatistics teams of regulatory organizations, innovative pharmaceutical companies, CROs, and research institutions.

- What is the level of expertise required to use the platform?

  The platform’s user interface has been designed to help clinical programmers and statistical programmers work effortlessly. It supports easy navigation and provides guidance to users, which benefits individuals with varying levels of expertise.

- Will the platform be a GxP-compliant system?

  Yes, the platform will be designed to comply with all applicable GxP requirements and US FDA guidance documents.
